Orchestrating our UI test suite with Maestro

How we nailed reliability, halved costs, and improved runtime performance of our tests

A robust and reliable test suite is critical in ensuring we ship features with high quality and confidence, and can iterate quickly.

Recently, on the Android team at Doist, we found ourselves in a situation where our UI test suite had become so flaky that it was significantly slowing down our delivery. Instead of addressing problems one by one, we took the opportunity to migrate our end-to-end tests to Maestro.

By the end of the migration we reached 99%+ reliability, a 50% cost reduction, and a 4x runtime speedup. In this article, we’ll go over our UI testing setup, why we adopted Maestro, and what we’ve learned, so you can too.

What our testing catalog looks like

The Todoist Android app has a comprehensive catalog of both unit and end-to-end tests. Since end-to-end tests are slow to run, we reserve them for our core workflows: critical user journeys in our client apps that must work reliably at all times for our customers. Our list of core workflows is continuously reviewed and updated by our Customer Experience team and squads working on new features. All client teams at Doist cover these core workflows with end-to-end tests to ensure their correctness.

Until early 2025, our core workflows were covered by Espresso tests that ran on PRs via Firebase Test Lab.

We used Kaspresso, which is essentially a wrapper on top of the Espresso and UI Automator frameworks and provides some helpful additional features, most importantly its built-in protection against flaky tests (the irony). For the tests themselves, we relied on Kakao, which offers a DSL for cleaner and more readable interactions with Espresso. We also wrote our own abstractions for actions and assertions to make it relatively easy to write complex tests while keeping the test code easy to follow.

Here’s what some of our Espresso tests look like with this setup:

    @Test
    fun reorder() = run {
        moveItem(5, 1)
        itemHierarchyIs(
            "Item 0",
            " Item 1",
            "s:Section 1",
            "Item 4",
            "Item 5",
            "s:Section 0",
            "Item 2",
            "Item 3",
        )
    }

    @Test
    fun renameSection() = run {
        openSectionOverflowMenu(2)
        clickRenameSection()
        replaceSectionName("Section X")
        submitSection()
        itemHierarchyIs(
            "Item 0",
            " Item 1",
            "s:Section X",
            "Item 2",
            "Item 3",
            "s:Section 1",
            "Item 4",
            "Item 5",
        )
    }

    @Test
    fun deleteSection() = run {
        openSectionOverflowMenu(2)
        clickDeleteSection()
        clickDialogPositiveButton()
        itemHierarchyIs(
            "Item 0",
            " Item 1",
            "s:Section 1",
            "Item 4",
            "Item 5",
        )
    }

While Kakao, Kaspresso, and our abstractions made our actual test code easier to reason about, the positives ended there.

Enter flakiness

To make Espresso “work” for a complex app like Todoist, we’ve had to do a lot of heavy lifting internally with our test suite, which includes, but is not limited to:

- Writing custom IdlingResources

- Maintaining a custom JUnit runner that:

  - Disables animations & UI delays

  - Disables soft keyboards

  - Extends the long-press timeouts by executing shell commands

- Writing custom synchronization wrappers for our reactive data streams.

All of this was an attempt to provide “perfect synchronization” for Espresso in the context of our tests, but, as is the case with most modern, large-scale Android apps, perfectly synchronizing the app across test actions is extremely difficult, if not impossible.

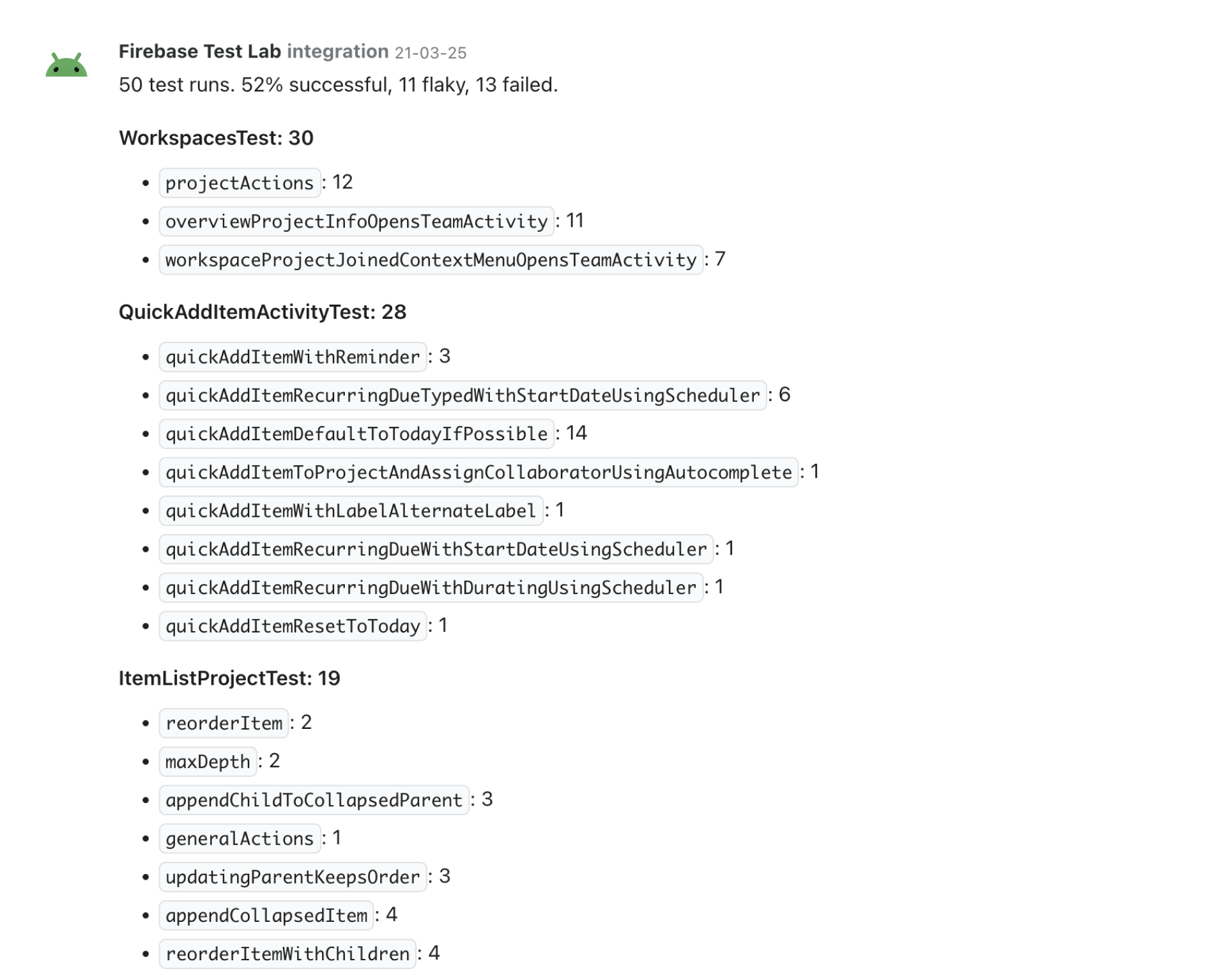

As a result, over the course of last year, the flakiness of our test suite kept growing to the point that, on a good day, only about half of our runs passed on the first try.

Because our test suite was so unreliable, engineers would often rerun it multiple times on their PRs before investigating whether it was their code that had actually broken the tests. With an average running time of ~35 minutes across N reruns for the entire suite, our PRs would remain pending for over an hour before we could merge.

This was a massive time sink, and it discouraged us from increasing our test coverage.
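To put rough numbers on that cost, here is a back-of-the-envelope sketch. It assumes that each attempt passes independently with the same probability and that ~35 minutes is the cost of a single full run; both are simplifying assumptions rather than measurements, and the function name `expectedMinutesToGreen` is ours.

```javascript
// Expected minutes until the suite goes green on a PR, modelling each attempt
// as an independent trial with a fixed pass rate (a simplifying assumption).
// For such a trial, the expected number of attempts is 1 / passRate.
function expectedMinutesToGreen(passRate, minutesPerRun) {
  if (!(passRate > 0 && passRate <= 1)) {
    throw new RangeError("passRate must be in (0, 1]");
  }
  return minutesPerRun / passRate;
}

const flaky = expectedMinutesToGreen(0.5, 35);   // roughly our "good day"
const stable = expectedMinutesToGreen(0.99, 35); // hypothetical: same run length, 99% pass rate
console.log(`50% pass rate: ~${flaky.toFixed(0)} min per PR`);
console.log(`99% pass rate: ~${stable.toFixed(0)} min per PR`);
```

Under these assumptions the first line prints ~70 minutes and the second ~35 minutes. The second figure only isolates the effect of reliability: it keeps the run length fixed and changes nothing else.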

Understanding the root cause

While it’s easy to attribute our flakiness to “Espresso being unstable,” the reality is more nuanced. Espresso itself isn’t inherently flaky; the issue stems from the architectural requirement for explicit synchronization in complex, async-heavy applications.

The Espresso Synchronization Contract

Espresso can only automatically track operations that post to Android’s MessageQueue (like View drawing). For everything else like Kotlin Coroutines, RxJava streams, network calls, database operations, or custom threading, you must write custom IdlingResources that tell Espresso when these operations start and finish.

Over time, we built an extensive IdlingResource infrastructure:

- CacheIdlingResource: Tracks when our CacheManager finishes loading data

- LocatorArchViewModelIdlingResource: Monitors async operations in all ViewModels

- RecyclerViewIdlingResource: Waits for RecyclerView adapter updates

- HighlightParserIdlingResource: Tracks text parsing in custom EditText widgets

- InteractiveDialogFragmentIdlingResource: Synchronizes with dialog lifecycles

- BottomSheetIdlingResource: Waits for bottom sheets to settle

Here’s what a typical test setup looked like for one of our tests:

    @Rule
    @JvmField
    val rules: RuleChain = RuleChain
        .outerRule(Timeout.seconds(30))
        .around(TestDataTestRule(testData, testLocator))
        .around(composeTestRule)
        .around(activityScenarioRule)
        .around(
            IdlingResourcesRule(
                CacheIdlingResource(testLocator),
                InteractiveDialogFragmentIdlingResource(
                    ArchiveSectionDialogFragment.Tag,
                    android.R.id.button1,
                ),
                InteractiveDialogFragmentIdlingResource(
                    ArchiveSectionDialogFragment.Tag,
                    android.R.id.button2,
                ),
                InteractiveDialogFragmentIdlingResource(
                    DeleteSectionFragment.Tag,
                    android.R.id.button1,
                ),
                BottomSheetIdlingResource(),
                RecyclerViewIdlingResource(android.R.id.list),
            ),
        )
        .around(ScreenshotRule())

Each IdlingResource required instrumenting production code, adding idleState properties to ViewModels, adding methods like isParsing() to custom views, and adding callbacks from our cache layer to notify the test infrastructure.

The fundamental issue wasn’t technical implementation; it was the ongoing maintenance burden. We have several examples of this from the past.

The Maintenance Trap

Example 1: Cache layer refactoring:

When we modernized our caching architecture, the CacheIdlingResource had to be rewritten from scratch. Our original implementation used Android’s BroadcastReceiver pattern to listen for cache load completion:

    - LocalBroadcastManager.getInstance(instrumentation.targetContext)
    -     .registerReceiver(
    -         DataLoadedBroadcastReceiver(),
    -         IntentFilter(Const.ACTION_DATA_LOAD_FINISHED),
    -     )
    + CacheManager.onLoaded { callback?.onTransitionToIdle() }

This change cascaded through 77 files. Every test that depended on cache synchronization needed updates. If we had missed updating even one test file, it would have started failing intermittently.

Example 2: Text parser synchronization:

Our autocomplete text field performs async parsing to highlight attributes like due dates, labels, etc. as you type. Getting Espresso to synchronize with this reliably was extremely difficult, and it required multiple fixes over several years.

The core problem was that when parsing finished, the UI didn’t immediately update. The parsed results are posted to the UI thread via a Handler, which means updates typically take two event loop cycles to complete: one through the handler and the other through the layout system.

Our first attempt at fixing this was a straightforward check to see if the parsing is done:

    class HighlightParserIdlingResource(...) : IdlingResource {
        override fun isIdleNow(): Boolean {
            val editText = activityRule.activity?.findViewById(viewId) as? AutocompleteHighlightEditText
            val idle = editText?.isParsing == false
            if (idle) {
                callback?.onTransitionToIdle()
            }
            return idle
        }
    }

This caused race conditions. We discovered that sometimes we were checking idle state before the view had even been added to the screen. Espresso would see isParsing = false and immediately try to interact with the UI before the UI had gone through the full update cycle. Tests would intermittently fail as a result.

Fixing this required modifying production code purely to improve test reliability:

      public void blockCurrentHighlightsAsync() {
          if (mAsyncWorker != null) {
              mAsyncWorker.execute(() -> {
                  // ... parsing logic ...
                  runOnUiThread(() -> {
                      if (getHandler() != null) {
                          // ... Block highlights
                      }
                  });
              });
    +     } else if (!isAttachedToWindow()) {
    +         // Retry when this view is attached to window.
    +         this.post(this::blockCurrentHighlightsAsync);
          }
      }

Down the line, we encountered more issues. We realized that Espresso was checking the idle state too infrequently, causing tests to slow down considerably. To fix this we added aggressive polling:

      class HighlightParserIdlingResource(...) : IdlingResource {
    +     private val REPEAT_DELAY = 200L
    +
          override fun isIdleNow(): Boolean {
              val editText = activityRule.activity?.findViewById(viewId) as? AutocompleteHighlightEditText
              val idle = editText?.isParsing == false
              if (idle) {
                  callback?.onTransitionToIdle()
    +         } else {
    +             // Check again shortly. Otherwise espresso will check again few seconds later
    +             // which slows down the test.
    +             editText?.postDelayed({ isIdleNow }, REPEAT_DELAY)
              }
              return idle
          }
      }

But this version suffered from the same race conditions as the original. The fix? Track state across calls and use a double-post to wait for two event loop cycles:

      class HighlightParserIdlingResource(...) : IdlingResource {
    -     private val REPEAT_DELAY = 200L
    +     private var wasParsing = true

          override fun isIdleNow(): Boolean {
              val editText = activityRule.activity?.findViewById(viewId) as? AutocompleteHighlightEditText
    -         val parsing = editText?.isParsing ?: false
    -         if (!parsing) {
    +         val parsing = editText?.let { it.isAttachedToWindow && it.isParsing } ?: false
    +
    +         // Only idle if we previously were parsing and now we're not
    +         // This ensures we detect the transition, not just the state
    +         val idle = !wasParsing && !parsing
    +
    +         if (idle) {
                  callback?.onTransitionToIdle()
              } else {
    -             // Check again shortly...
    -             editText?.postDelayed(REPEAT_DELAY) { isIdleNow }
    +             // Double-post to wait for TWO event loop cycles
    +             // First post waits for parser's handler
    +             // Second post waits for layout mechanisms
    +             editText?.post { editText.post { isIdleNow } }
              }
    -         return !parsing
    +
    +         wasParsing = parsing
    +         return idle
          }
      }

The result: A supposedly “simple” IdlingResource for text parsing required:

- Deep knowledge of Android’s Handler posting mechanism

- Understanding of the view attachment lifecycle

- State tracking across multiple calls (wasParsing)

- Timing hacks with double-post

- Production code modifications to support testing

And despite three separate fixes over many months, it still felt fragile. This, by the way, is one IdlingResource out of six that we needed for typical tests.

This isn’t unique to our codebase; it’s an inherent challenge with Espresso’s synchronization model:

- Every async operation needs tracking - Miss one new coroutine or RxJava stream, tests become flaky

- Tight coupling to implementation - Change threading models, IdlingResources break

- Compounding fragility - With 6+ IdlingResources per test, if ANY ONE becomes stale, the entire suite destabilizes

- Knowledge burden - Engineers needed a deep understanding of the test infrastructure to write reliable tests

Enter Maestro

The unreliability of our test suite and the growing flakiness were recurring topics of discussion in my 1:1s with my manager. Having worked with Maestro on three teams in the past, I had brought it up in passing during those conversations.

Maestro eliminates the maintenance burden through a fundamentally different architecture compared to Espresso. Instead of requiring your app to tell the test framework about async operations, Maestro uses a black box polling mechanism. It interacts with apps via UiAutomator and accessibility services, continuously polling the UI state without requiring knowledge of internal async operations. Instead of needing explicit synchronization hooks, Maestro simply waits for UI elements to appear and become interactable, similar to how a user would wait.
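The idea of black-box polling can be illustrated with a small sketch. This is not Maestro’s implementation; `waitForElement`, `readUiTree`, the fake app, and the timeout values are hypothetical names and numbers chosen for illustration. The point is that the waiter only ever sees a snapshot of what is currently on screen and knows nothing about the app’s internals.

```javascript
// Conceptual sketch of black-box waiting: repeatedly read the visible UI and
// succeed as soon as the target shows up, without any hooks into app internals.
// `readUiTree` is a hypothetical function resolving to the texts currently on screen.
async function waitForElement(readUiTree, text, { timeoutMs = 5000, intervalMs = 100 } = {}) {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const visible = await readUiTree();
    if (visible.includes(text)) return true;  // element appeared: proceed with the test
    if (Date.now() >= deadline) return false; // gave up: the step fails
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Fake "app" whose task list finishes loading after ~300 ms of async work.
function createFakeApp() {
  const screen = ["Loading"];
  setTimeout(() => screen.splice(0, 1, "Inbox", "Test task 1"), 300);
  return () => Promise.resolve([...screen]);
}

async function demo() {
  const found = await waitForElement(createFakeApp(), "Test task 1");
  const missing = await waitForElement(createFakeApp(), "No such task", { timeoutMs: 500 });
  return { found, missing };
}

demo().then((result) => console.log(result));
```

Whether the fake app loads its data via a timer, a promise, or anything else makes no difference to `waitForElement`, which is the property that lets this style of test survive refactors of the app’s threading model.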

This offered significant advantages for us:

- Zero production code changes - no idleState properties, no test callbacks

- Implementation agnostic - doesn’t matter if you use Coroutines, RxJava, or thread pools

- Built-in retry logic - automatically handles timing variations

- Low maintenance - no IdlingResources to update when you refactor

With Maestro, if we made a fundamental change to our app like switching out our reactive streams, the tests would still continue to work without any modifications because Maestro doesn’t care how the UI is updated, only that it is updated.

I decided to do a small spike with four core workflows authored as Maestro tests. This served as a baseline for the effort required to write the test and for demonstrating whether reliability improves.

Once the team agreed it felt like a promising option, we convened in Athens during our team retreat to discuss adopting Maestro and to participate in a small hackathon that got everyone up to speed on writing Maestro tests.

If you’ve worked with Maestro in the past, you might already know that writing tests is the easy part. (Go check out their docs to get started: https://docs.maestro.dev/)

For us, the first order of business was setting up the infrastructure to run Maestro tests on our CI. For this, we set up a separate build type and added a test data harness that hydrates our data layer with custom test data at the launch of the first activity.

In case you’re curious, here’s what that looks like:

    class MaestroApp : App() {
        private val lifecycleCallbacks = object : com.todoist.util.ActivityLifecycleCallbacks {
            override fun onActivityPreCreated(activity: Activity, savedInstanceState: Bundle?) {
                super.onActivityPreCreated(activity, savedInstanceState)
                unregisterActivityLifecycleCallbacks(this)

                val objectWriter: ObjectWriter by activity.asLocator()

                /**
                 * "test_data" is a launch argument passed via a Maestro flow
                 * to configure the account & plan type it uses for testing
                 *
                 * - launchApp:
                 *     arguments:
                 *       test_data: free-simple
                 */
                val testUser = activity.intent.getStringExtra("test_data")
                val featureFlagsToEnable = activity.intent.getStringExtra("enabled_feature_flags")

                runBlocking {
                    getTestData(testUser, objectWriter)?.let {
                        /**
                         * TestDataSetupHelper is responsible for
                         * - mocking the API responses.
                         * - seeding the database with passed test data.
                         * - enabling the passed feature flags for testing.
                         */
                        TestDataSetupHelper(this@MaestroApp).apply {
                            setupTestData(it)
                            setupTestApiClient(it)
                            featureFlagsToEnable?.split(",")?.forEach { flag ->
                                enableFeatureFlag(flag)
                            }
                        }
                    }
                }
            }
        }

        override fun onCreate() {
            registerActivityLifecycleCallbacks(lifecycleCallbacks)
            super.onCreate()
        }

        override fun createFeatureFlagManager() = TestFeatureFlagManager(
            FeatureFlag.entries.associateWith { it == FeatureFlag.FooBarFlag },
        )

        override fun createApiClient(): ApiClient = TestApiClient(this)

        private fun getTestData(testUser: String?, objectWriter: ObjectWriter) = when (testUser) {
            "free-simple" -> testDataFreeSimple(objectWriter)
            "free-shared-project" -> testDataFreeSharedProject()
            "premium" -> testDataPremium()
            "workspaces" -> testDataWorkspaces()
            else -> null
        }
    }

    private fun testDataFreeSimple(objectWriter: ObjectWriter): TestData {
        val inbox = Project(id = "project_id_1", name = "Inbox", isInbox = true)
        val projects = listOf(
            inbox,
            Project(id = "project_id_2", name = "Test Project 1"),
            // other projects,
        )
        val user = User(
            // Set of default user properties here,
        )
        val workspace = Workspace(id = "1", name = "Test workspace")
        val section = Section(id = "section_id_1", name = "Section 1", projectId = inbox.id)
        val sections = listOf(section)
        val task1 = Item(
            id = "task_id_1",
            content = "Test task 1",
            projectId = inbox.id,
            description = "Foo\nBar",
        )
        val items = listOf(
            task1,
            // other tasks,
        )
        val notes = listOf(
            Note(
                id = "comment_id_1",
                content = "Task comment",
                itemId = task1.id,
                projectId = null,
                postedUid = user.id,
            ),
            // other notes,
        )
        val filters = listOf(Filter(id = "1", name = "All tasks", query = "view all"))
        val responses = buildMap {
            // Set of mock responses for our API endpoints,
        }
        val personalLabel = Label(
            "1",
            "Personal_label",
            itemOrder = 1,
        )
        val dynamicLabel1 = Label(
            "2",
            "Dynamic_label_1",
            isDynamic = true,
        )

        return TestData(
            user = user,
            workspaces = listOf(workspace),
            userPlanPair = freeTestUserPlan to testUserPlan,
            projects = projects,
            sections = sections,
            items = items,
            notes = notes,
            labels = listOf(personalLabel, dynamicLabel1),
            filters = filters,
            responses = responses,
        )
    }

    // ... Test data for other plans are configured similarly

    private fun Any.toOkResponse(objectWriter: ObjectWriter): ApiResponse {
        return TestApiClient.ok(content = objectWriter.writeValueAsString(this))
    }

With this test data harness, writing tests under different account & user plan types was a one-line configuration in our Maestro flows. We then added a new GitHub Actions workflow to our CI that builds our Maestro APK and uploads it to Maestro Cloud for every PR with the “run tests” label.

    name: UI Tests

    on:
      pull_request:
        types:
          - labeled
          - synchronize
          - opened
      workflow_dispatch:

    concurrency:
      group: maestro-tests-${{ github.event.pull_request.number }}
      cancel-in-progress: ${{github.event.action != 'labeled'}}

    jobs:
      maestro:
        runs-on: ubicloud-standard-4
        timeout-minutes: 180
        if: ${{ !contains(github.event.pull_request.labels.*.name, 'skip tests') && !startsWith(github.event.pull_request.head.ref, 'rel.') }}
        steps:
          - name: Check for 'run tests' label
            id: check_label
            uses: actions/github-script@v6
            with:
              script: |
                const { owner, repo } = context.repo;
                const issue_number = context.payload.pull_request.number;
                const { data: labels } = await github.rest.issues.listLabelsOnIssue({
                  owner,
                  repo,
                  issue_number,
                });
                const hasRunTestsLabel = labels.some(label => label.name === 'run tests');
                core.setOutput('has_run_tests_label', hasRunTestsLabel ? 'true' : 'false');

          - name: Fail if 'run tests' label is missing
            if: ${{ steps.check_label.outputs.has_run_tests_label != 'true' }}
            run: |
              echo "The 'run tests' label is required but not present.";
              exit 1;

          - name: Checkout code
            uses: actions/checkout@v4
            with:
              token: ${{ secrets.DOIST_BOT_TOKEN }}
              submodules: recursive
              fetch-depth: 0

          - name: Copy CI gradle.properties
            run: mkdir -p ~/.gradle ; cp .github/ci-4core-gradle.properties ~/.gradle/gradle.properties

          - name: Setup Java
            uses: actions/setup-java@v4
            with:
              distribution: "temurin"
              java-version: "21"

          - name: Setup Gradle
            uses: gradle/actions/setup-gradle@v4
            with:
              cache-read-only: true

          - name: Build APK for Maestro
            run: ./gradlew :todoist-app:assembleGoogleMaestro

          - name: Create folder
            run: mkdir apk

          - name: Move APK to folder and rename it
            run: find . -name "*.apk" -exec mv '{}' apk/release.apk \;

          - id: upload
            uses: mobile-dev-inc/action-maestro-cloud@v1.9.8
            with:
              api-key: ${{ secrets.MAESTRO_API_KEY }}
              project-id: ${{ secrets.PROJECT_ID }}
              app-file: apk/release.apk
              timeout: 60

          - name: Access Outputs
            if: always()
            run: |
              echo "Console URL: ${{ steps.upload.outputs.MAESTRO_CLOUD_CONSOLE_URL }}"
              echo "Flow Results: ${{ steps.upload.outputs.MAESTRO_CLOUD_FLOW_RESULTS }}"
              echo "Upload Status: ${{ steps.upload.outputs.MAESTRO_CLOUD_UPLOAD_STATUS }}"
              echo "App Binary ID: ${{ steps.upload.outputs.MAESTRO_CLOUD_APP_BINARY_ID }}"

Once the infrastructure was ready, we began migrating our core Espresso workflow tests to Maestro as part of our team’s Housekeeping initiative. At Doist, we reserve one day per week for “housekeeping”, where we pay off tech debt and claim back time for internal improvements.

Over the course of 4 Housekeeping days and leveraging the time of 9 team members, we chipped away at our test suite and, in the end, converted 63 tests from Espresso to Maestro.

The first roadblock

As we incrementally adopted Maestro and increased the number of core workflows we covered, we noticed an issue: with each test we added, our test suite’s total runtime increased by 2-4 minutes, regardless of whether the test ran for 10 seconds or 10 minutes.

This was puzzling because the runtime would soon inflate dramatically and end up as time-consuming as our Espresso test suite on Firebase Test Lab.

After discussing this with the folks over at Maestro, we realized an interesting nuance about their cloud platform where we run our tests.

“The cloud environment optimises for repeatability. One device, between one test and the next, is wiped and recreated, so that there’s no chance of one test ever affecting another.”

This meant that between each test (no matter how short or long running), there was a delay for

- Stashing the test results

- Wiping & rebooting the test device

- Transferring & reinstalling the app.

To address this, we had to rethink how we organized our test flows. Instead of running all flows individually, which would have a significant runtime cost, we decided to batch multiple flows into a parent flow.

By having a parent flow target a workflow category, we reduce the number of cleanups and reprovisions needed. While this theoretically comes at a cost of reliability, so far it’s been working for us just fine.

With this approach, we reduced the run targets from 63 individual flows to 10 parent flows that orchestrate the subflows.
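The effect of batching on the fixed per-target cost can be estimated with simple arithmetic. The sketch below uses the 2-4 minute range quoted earlier and sums the overhead over all run targets; `overheadMinutes` is our own helper name. Wall-clock time also depends on how many devices run in parallel and on the flows themselves, so treat the result as an illustration of how the overhead scales, not as a prediction of suite runtime.

```javascript
// Time spent purely on per-target overhead (stashing results, wiping and
// rebooting the device, reinstalling the app), summed over all run targets.
function overheadMinutes(runTargets, perTargetMinutes = { min: 2, max: 4 }) {
  return {
    min: runTargets * perTargetMinutes.min,
    max: runTargets * perTargetMinutes.max,
  };
}

const individual = overheadMinutes(63); // one run target per flow
const batched = overheadMinutes(10);    // ten parent flows
console.log(`63 targets: ${individual.min}-${individual.max} min of overhead`);
console.log(`10 targets: ${batched.min}-${batched.max} min of overhead`);
```

This prints 126-252 minutes for 63 targets and 20-40 minutes for 10 targets: the overhead shrinks in proportion to the number of run targets, regardless of how long the flows inside them take.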

Here’s what one such parent flow looks like:

    appId: com.todoist.debug
    name: "Quick add flows"
    ---
    - runFlow: flows/quick-add-add-to-project.yaml
    - runFlow: flows/quick-add-item.yaml
    - runFlow: flows/quick-add-item-recurring-due-start-date-using-scheduler.yaml
    - runFlow: flows/quick-add-parsing.yaml
    - runFlow: flows/quick-add-paste-multiple.yaml
    - runFlow: flows/quick-add-pills.yaml
    - runFlow: flows/quick-add-schedule-button.yaml
    - runFlow: flows/quick-add-schedule-duration.yaml

With this strategy, we cut our runtime by a factor of 4, from ~80 minutes to under 20 minutes.

Monitoring test failures

Unlike most workplaces where teams use Slack for internal communication, we like to do things a little differently at Doist. We are async-first and use our own product, Twist, for our internal communication.

This meant the handy Slack integration Maestro provides for reporting test failures would not work for us.

To solve this, we decided to build our own custom report tooling that:

- Receives test run reports from Maestro via a webhook notification

- Filters and stores the failed runs

- Sends us a report on the failed runs every workday via a message on a Twist Channel.

To receive webhook notifications, we decided to use Firebase Cloud Functions, a serverless framework that lets us run backend code in response to background events.

Here’s what the function looks like

    const { onRequest } = require("firebase-functions/v2/https");
    const admin = require("firebase-admin");
    const { logger } = require("firebase-functions");

    // Initialize Firebase Admin SDK.
    admin.initializeApp();
    const db = admin.firestore();

    /**
     * Maestro Webhook Handler
     * Receives webhook notifications from Maestro Cloud when test runs complete.
     * Stores failed test results in Firestore for later reporting.
     */
    exports.maestroWebhook = onRequest(
      {
        cors: true,
      },
      async (request, response) => {
        // Log incoming request for debugging
        logger.info("Received webhook request", {
          method: request.method,
          headers: request.headers,
        });

        // Only accept POST requests
        if (request.method !== "POST") {
          logger.warn("Invalid method", { method: request.method });
          response.status(405).send("Method Not Allowed");
          return;
        }

        // Validate webhook token
        // For local testing, use MAESTRO_WEBHOOK_TOKEN env var
        // For production, this should be set via Firebase secrets
        const authHeader = request.headers.authorization;
        const expectedToken = process.env.MAESTRO_WEBHOOK_TOKEN;

        if (!authHeader || !authHeader.startsWith("Bearer ")) {
          logger.warn("Missing or invalid authorization header");
          response.status(401).send("Unauthorized: Missing token");
          return;
        }

        const token = authHeader.split("Bearer ")[1];
        if (token !== expectedToken) {
          logger.warn("Invalid webhook token");
          response.status(401).send("Unauthorized: Invalid token");
          return;
        }

        try {
          // Parse webhook payload
          const payload = request.body;
          logger.info("Processing webhook payload", {
            uploadId: payload.id,
            name: payload.name,
            platform: payload.platform,
            flowCount: payload.flows?.length,
          });

          // Filter for failed flows only
          const failedFlows = payload.flows.filter(
            (flow) => flow.status === "FAILURE",
          );

          if (failedFlows.length === 0) {
            logger.info("No failed flows, skipping storage", {
              uploadId: payload.id,
            });
            response.status(200).send();
            return;
          }

          // Prepare document to store in Firestore
          const testResult = {
            uploadId: payload.id,
            uploadName: payload.name,
            uploadUrl: payload.url,
            platform: payload.platform,
            appId: payload.appId,
            githubBranch: payload.githubBranch,
            startTime: payload.startTime,
            endTime: payload.endTime,
            timestamp: new Date(),
            failedFlows: failedFlows.map((flow) => ({
              id: flow.id,
              name: flow.name,
              url: flow.url,
              failureReason: flow.failureReason,
              startTime: flow.startTime,
              endTime: flow.endTime,
            })),
            totalFlows: payload.flows.length,
            failedCount: failedFlows.length,
          };

          // Store in Firestore collection 'maestro-test-results'
          const docRef = await db
            .collection("maestro-test-results")
            .add(testResult);

          logger.info("Successfully stored test results", {
            docId: docRef.id,
            uploadId: payload.id,
            failedCount: failedFlows.length,
          });

          response.status(200).send();
        } catch (error) {
          logger.error("Error processing webhook", error);
          response.status(500).send("Internal server error");
        }
      },
    );

These ~100 lines of JavaScript do a few things. They:

- Accepts POST webhooks from Maestro Cloud when test runs finish

- Filters for failed tests only (ignores successful runs)

- Stores failure details in a Firebase Firestore database
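The filtering step can be tried in isolation. The payload below is a hypothetical example that only mirrors the fields the handler above reads (`id`, `flows`, `status`, `failureReason`); it is not a documented Maestro Cloud schema, and `selectFailedFlows` is our own helper name. Unlike the handler, this sketch also tolerates a payload without a `flows` array, which is one possible hardening rather than something the original code does.

```javascript
// Keep only failed flows, treating a missing or malformed `flows` field as empty.
function selectFailedFlows(payload) {
  const flows = Array.isArray(payload?.flows) ? payload.flows : [];
  return flows.filter((flow) => flow.status === "FAILURE");
}

// Hypothetical payload, shaped after the fields the handler accesses.
const samplePayload = {
  id: "upload-123",
  flows: [
    { name: "Quick add flows", status: "SUCCESS" },
    { name: "Section flows", status: "FAILURE", failureReason: "Element not found: Rename" },
  ],
};

console.log(selectFailedFlows(samplePayload).map((flow) => flow.name)); // [ 'Section flows' ]
console.log(selectFailedFlows({}).length); // 0
```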

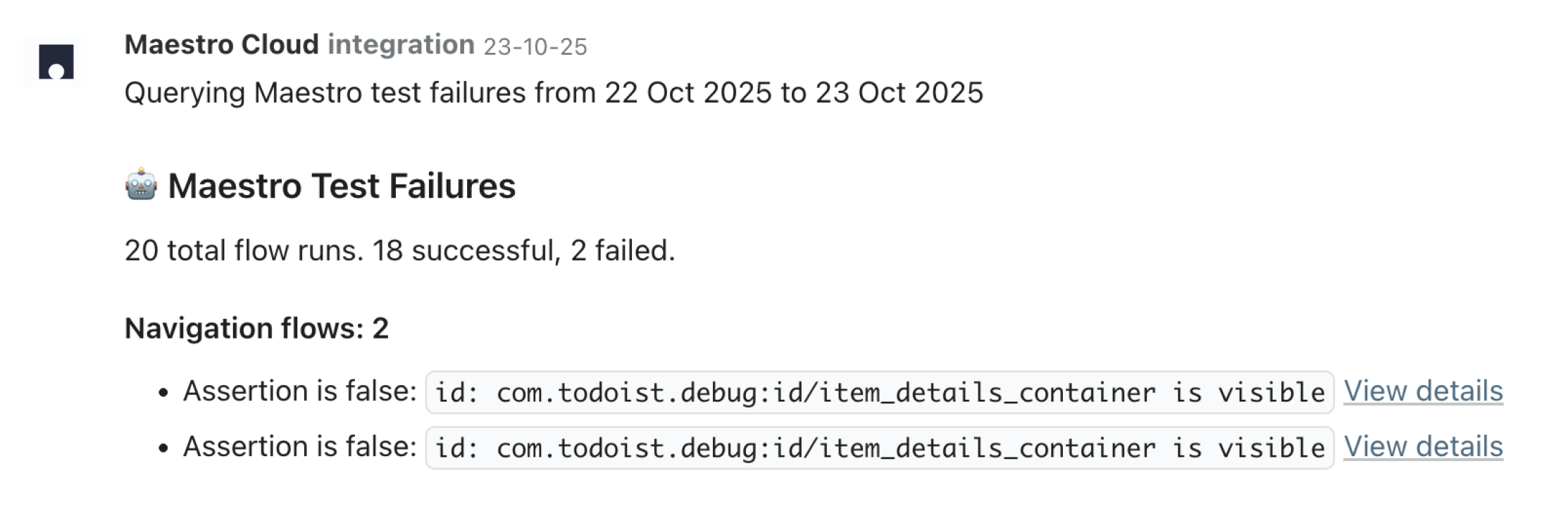

Throughout the day, we continue to collect test reports in our database. At the beginning of the next day, we run a dedicated GitHub Action to query the database for failures and build a report, which is posted on our Twist Channel.

Here’s what that action looks like.

    name: Report failed Maestro tests on Twist

    on:
      schedule:
        # Run at 01:30 on Mon-Fri
        - cron: "30 1 * * 1-5"
      workflow_dispatch:

    jobs:
      report-maestro-tests:
        runs-on: ubuntu-latest
        timeout-minutes: 10
        steps:
          - name: Checkout
            uses: actions/checkout@v4

          - name: Setup Node.js
            uses: actions/setup-node@v4
            with:
              node-version: "22"
              cache: "npm"
              cache-dependency-path: ".github/scripts/package-lock.json"

          - name: Install dependencies
            run: |
              cd .github/scripts
              npm install

          - name: Authenticate with Google Cloud
            uses: google-github-actions/auth@v2
            with:
              credentials_json: ${{ secrets.MAESTRO_DEV_SERVICE_ACCOUNT }}

          - name: Query Firestore and generate report
            id: report
            run: |
              cd .github/scripts
              REPORT=$(node report-maestro-failures.js)
              if [ -n "$REPORT" ]; then
                echo "report<<EOF" >> $GITHUB_OUTPUT
                echo "$REPORT" >> $GITHUB_OUTPUT
                echo "EOF" >> $GITHUB_OUTPUT
              fi

          - name: Share report in Twist
            # Report only if the report is not empty
            if: ${{ steps.report.outputs.report != '' }}
            uses: Doist/twist-post-action@master
            with:
              message: ${{ steps.report.outputs.report }}
              install_id: ${{ secrets.TWIST_TODOIST_FAILED_TESTS_INSTALL_ID }}
              install_token: ${{ secrets.TWIST_TODOIST_FAILED_TESTS_INSTALL_TOKEN }}

          - name: If failed, ping Android team
            if: ${{ failure() }}
            uses: Doist/twist-post-action@master
            with:
              message: "❌ Maestro report action failed: https://github.com/Doist/Todoist-Android/actions/runs/${{ github.run_id }}"
              install_id: ${{ secrets.TWIST_TODOIST_FAILED_TESTS_INSTALL_ID }}
              install_token: ${{ secrets.TWIST_TODOIST_FAILED_TESTS_INSTALL_TOKEN }}

And here’s the code that queries and formats the failure reports:

    #!/usr/bin/env node

    /**
     * Query Firestore for failed Maestro tests from yesterday and format a report for Twist.
     */

    const { Firestore } = require("@google-cloud/firestore");

    async function main() {
      // Initialize Firestore.
      const firestore = new Firestore({
        projectId: process.env.FIRESTORE_PROJECT_ID,
      });

      // Calculate yesterday's date range.
      const now = new Date();
      const yesterday = new Date(now);
      yesterday.setDate(yesterday.getDate() - 1);
      yesterday.setHours(0, 0, 0, 0);

      const today = new Date(now);
      today.setHours(0, 0, 0, 0);

      // Format dates as "dd MMM YYYY".
      const formatDate = (date) => {
        const months = [
          "Jan",
          "Feb",
          "Mar",
          "Apr",
          "May",
          "Jun",
          "Jul",
          "Aug",
          "Sep",
          "Oct",
          "Nov",
          "Dec",
        ];
        const day = date.getDate().toString().padStart(2, "0");
        const month = months[date.getMonth()];
        const year = date.getFullYear();
        return `${day} ${month} ${year}`;
      };

      console.log(
        `Querying Maestro test failures from ${formatDate(yesterday)} to ${formatDate(today)}`,
      );

      // Query Firestore for test results from yesterday.
      const snapshot = await firestore
        .collection("maestro-test-results")
        .where("timestamp", ">=", yesterday)
        .where("timestamp", "<", today)
        .orderBy("timestamp", "desc")
        .get();

      if (snapshot.empty) {
        console.log("🎉 No Maestro test failures found for yesterday 🎉");
        process.exit(0);
      }

      // Aggregate data across all uploads.
      let totalFlowRuns = 0;
      let totalSuccessful = 0;
      let totalFailed = 0;
      const failuresByFlow = new Map(); // Map<flowName, Array<{reason, url}>>.

      snapshot.forEach((doc) => {
        const data = doc.data();
        totalFlowRuns += data.totalFlows;
        totalFailed += data.failedCount;
        totalSuccessful += data.totalFlows - data.failedCount;

        // Group failures by flow name.
        data.failedFlows.forEach((flow) => {
          if (!failuresByFlow.has(flow.name)) {
            failuresByFlow.set(flow.name, []);
          }
          failuresByFlow.get(flow.name).push({
            reason: flow.failureReason,
            url: flow.url,
          });
        });
      });

      // Format the report.
      let report = "## 🤖 Maestro Test Failures\n\n";
      report += `${totalFlowRuns} total flow runs. ${totalSuccessful} successful, ${totalFailed} failed.\n\n`;

      // Sort flows by failure count (descending).
      const sortedFlows = Array.from(failuresByFlow.entries()).sort(
        (a, b) => b[1].length - a[1].length,
      );

      sortedFlows.forEach(([flowName, failures]) => {
        report += `**${flowName}: ${failures.length}**\n\n`;
        failures.forEach((failure) => {
          // If the reason contains a ":", wrap everything after the first ":" in backticks.
          const formattedReason = failure.reason.replace(
            /^([^:]+:)\s*(.+)$/,
            "$1 `$2`",
          );
          report += `- ${formattedReason} [View details](${failure.url})\n`;
        });
        report += "\n";
      });

      // Output the report.
      console.log(report);
    }

    main().catch((error) => {
      console.error("Error querying Firestore:", error);
      process.exit(1);
    });

With our custom reporting tooling deployed, we get daily insights into the health of our test suite.

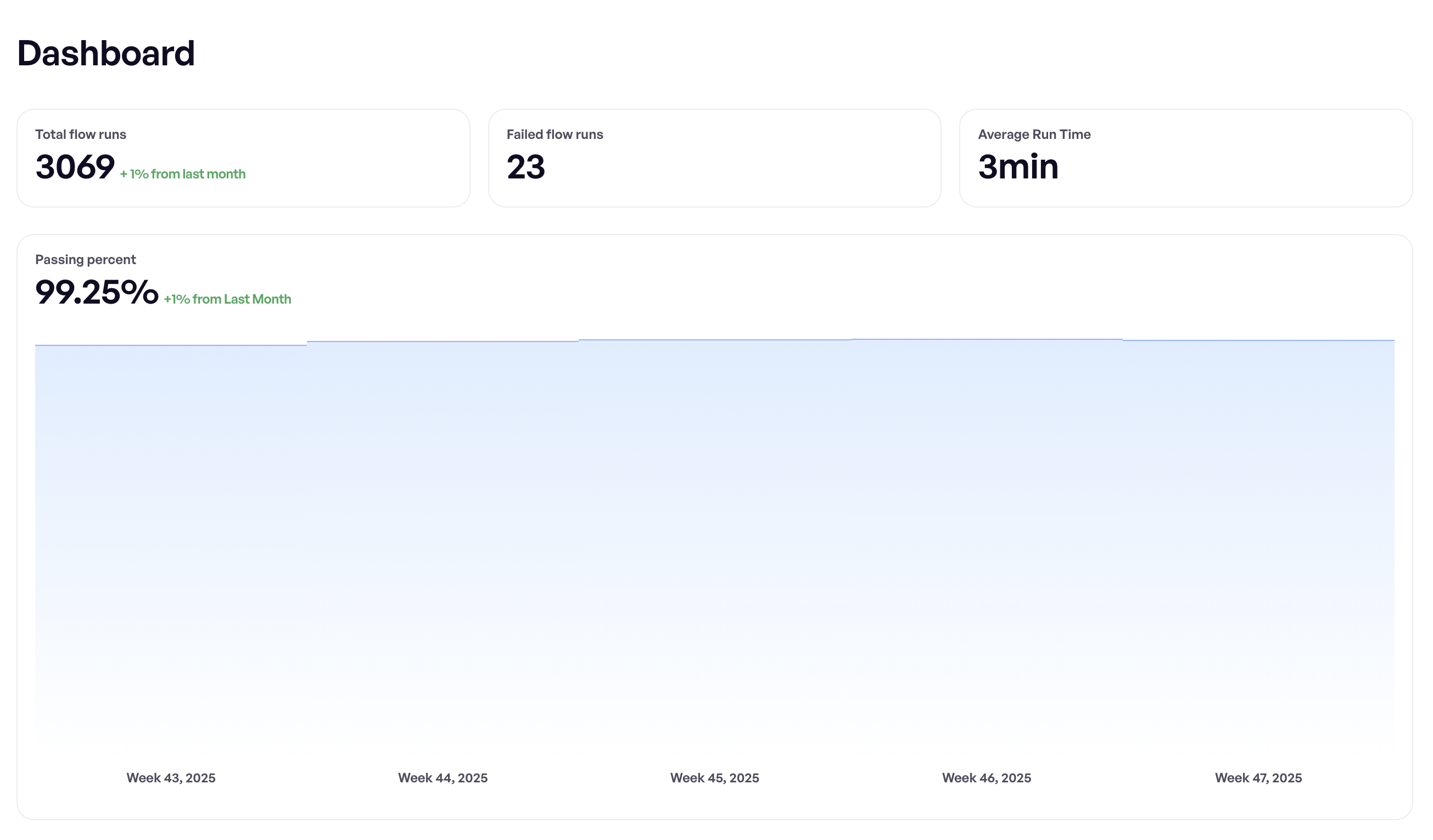
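The failure-reason formatting used in the report script can be tried on its own. The regular expression below is the one from the script; the two failure messages are made-up examples, and `formatReason` is our own wrapper name.

```javascript
// Same regex as the report script: keep the text up to the first ":" as-is and
// wrap everything after it in backticks so it renders as inline code.
function formatReason(reason) {
  return reason.replace(/^([^:]+:)\s*(.+)$/, "$1 `$2`");
}

// Hypothetical failure messages, for illustration only.
console.log(formatReason("Assertion is false: id: submit is visible"));
console.log(formatReason("Timed out"));
```

The first call prints the message with `id: submit is visible` wrapped in backticks; only the first colon acts as the split point because `[^:]+` cannot cross it. The second message has no colon, so it comes back unchanged. A reason that spans several lines would also come back unchanged, since `.` does not match newlines.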

Did Maestro work for us?

It took us a few months of collective effort to migrate our core workflows from Espresso to Maestro, but I am happy to share that this migration has been a huge success. Our test suite’s success rate now averages ~99%, up from the abysmal 50% previously.

This has not only helped us deliver value faster but also with high quality and confidence. Since the effort required to author Maestro tests is low, we’ve also seen team members voluntarily increase our test coverage. When things do get flaky, investigating and fixing Maestro tests takes a fraction of the time it takes with Espresso.

While we give up fine-grained control over synchronization with Maestro’s black-box approach, for our use case, the tradeoffs are worthwhile. We gained reliability and reduced maintenance burden in exchange for less visibility into our app’s internal state during tests. It’s important to note that Maestro isn’t inherently more stable than Espresso; they’re just different paradigms. Espresso’s grey-box approach offers precise control for teams who can maintain comprehensive IdlingResource coverage. Maestro’s black-box approach offers simplicity for teams who prioritize test maintenance velocity over internal visibility.

Adopting Maestro for our end-to-end tests has also resulted in significant monetary savings. Unlike Firebase Test Lab, where we are billed on the run time of our tests (yes, even the flaky runs), on Maestro, we pay a subscription fee for the number of devices we can run our tests on, which means we pay a fixed cost each month, regardless of our runtime. To put this into perspective, at its peak, we were being billed ~$2000 per month for our Firebase Test Lab usage, which came down by 50% after we migrated to Maestro.

All in all, Maestro has been a significant upgrade in how we test and ship the Todoist Android app. This work is far from done; we’re still increasing our test coverage and aiming to reach the 99th percentile in test stability month over month. Certain missing features in Maestro have prevented us from going all in; one such key feature is drag-and-drop support. We’re confident that our usage will continue to increase as Maestro evolves. We hope that by sharing our experience adopting and setting up Maestro, it will spark your curiosity and help shape your strategy. If you have any questions, please let us know.